Safety Management Systems

Do You Really Need a Risk Matrix?

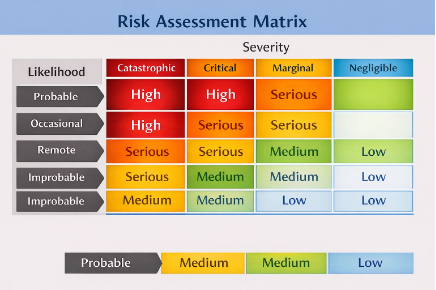

Risk matrices are widely used across aviation to visually evaluate and prioritize risk by plotting likelihood (probability) against severity (consequence). Their intended purpose is to help organizations manage limited resources, focus attention on the most critical hazards, improve decision-making, and support effective mitigation strategies.

However, many organizations rarely pause to ask a more fundamental question: what decision is the risk matrix actually informing—and does it do so reliably?

More importantly, can a risk matrix answer the questions safety managers are routinely asked?

- Should we spend $1,000,000 to move this risk from “yellow” to “green”?

- Are our existing controls actually effective?

- Is this level of risk acceptable in relation to our safety objectives?

- If we invest $X, should we reasonably expect risk to be reduced—and by how much?

The Problem with Conventional Risk Matrices

A substantial body of literature suggests that traditional approaches to risk assessment—particularly qualitative risk matrices—can degrade decision-making rather than improve it. The lack of quantitative rigor, heavy reliance on subjective judgment, and the intuitive appeal of color-coded boxes create the illusion of risk control without providing meaningful decision support.

A key weakness is the over-reliance on Subject Matter Experts (SMEs) to estimate likelihood. While SMEs possess essential operational knowledge, expertise alone does not guarantee accurate probability estimates. Research consistently shows that human judgment is vulnerable to cognitive biases such as overconfidence, anchoring, and excessive focus on recent or vivid events.

Compounding this issue, SMEs are rarely given feedback on the accuracy of past estimates. Without structured feedback loops, estimates do not improve over time—an outcome that directly undermines the SMS principle of continuous improvement.
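One way to build such a feedback loop is to record each probability an SME states and later score it against what actually happened. The sketch below uses the Brier score, a standard scoring rule that the article itself does not name; the forecast record is hypothetical and exists only to show the arithmetic. Lower scores are better, and always answering "50/50" scores 0.25.

```python
def brier_score(forecasts, outcomes):
    """Mean squared gap between stated probabilities and outcomes (1 = occurred, 0 = did not)."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and of equal length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical record: probabilities one SME assigned to five events,
# and whether each event occurred during the review period.
sme_forecasts = [0.9, 0.8, 0.7, 0.2, 0.1]
observed = [1, 0, 1, 0, 0]

score = brier_score(sme_forecasts, observed)
baseline = brier_score([0.5] * len(observed), observed)  # uninformative "50/50" answers

print(f"SME Brier score: {score:.3f}  (50/50 baseline: {baseline:.3f})")
```

Reporting the score back to the estimator after each review period is what turns a one-off judgment into something that can improve over time.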

Without structured analytical tools, data, and training in cognitive bias awareness, SME input becomes inconsistent and unreliable as the primary basis for risk assessment.

The Illusion of Communication

Risk matrices also create an “illusion of communication.” Numerous studies demonstrate that commonly used likelihood terms—such as unlikely, possible, or likely—are interpreted very differently by different individuals.

Ask three safety professionals what “likely” means in numerical terms, and you will receive three different answers. When risk categories lack shared quantitative meaning, discussions about risk acceptance, prioritization, and mitigation become subjective and internally inconsistent.

Why Risk Matrices Fall Short in SMS

From an SMS perspective, risk matrices fail in several critical ways:

- They provide only a snapshot in time, not a dynamic view of risk.

- Likelihood estimates are highly unstable and rarely validated.

- They offer no direct feedback on control effectiveness.

- They do not clearly show which organizational objectives are at risk.

- They do not support determinations of whether risk is acceptable or reasonably reduced.

Moving Beyond the Matrix

So what should safety managers use instead?

Improve estimation quality

Train those involved in risk assessment to express uncertainty more precisely. Define likelihood terms quantitatively and explicitly account for control effectiveness rather than assuming it.
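As a minimal sketch of what this could look like, the terms can be tied to explicit probability ranges and the effect of a control stated as a number instead of being implied. The boundaries, the 50% effectiveness figure, and the simple multiplicative model are all assumptions chosen for illustration, not recommended values.

```python
# Hypothetical scale: the terms come from the text, but the probability
# boundaries are placeholders an organization would need to set for itself.
LIKELIHOOD_SCALE = [
    ("unlikely", 0.00, 0.05),  # annual probability below 5%
    ("possible", 0.05, 0.30),  # 5% up to (not including) 30%
    ("likely", 0.30, 1.00),    # 30% and above
]


def term_for(probability):
    """Return the agreed term for an annual probability between 0 and 1."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    for term, low, high in LIKELIHOOD_SCALE:
        if low <= probability < high:
            return term
    return LIKELIHOOD_SCALE[-1][0]  # only reached when probability == 1.0


def residual_probability(base_probability, control_effectiveness):
    """Assumed simple model: the control prevents a stated fraction of occurrences."""
    if not 0.0 <= control_effectiveness <= 1.0:
        raise ValueError("control_effectiveness must be between 0 and 1")
    return base_probability * (1.0 - control_effectiveness)


base = 0.40  # hypothetical annual probability with no control in place
residual = residual_probability(base, control_effectiveness=0.50)
print(term_for(base), "->", term_for(residual))
```

Once the scale is written down, two assessors who both say "likely" are at least committing to the same numerical range, and the claimed effectiveness of a control becomes a figure that can later be checked.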

Use quantitative decision-support tools

Simple quantitative tools can dramatically improve insight. Monte Carlo analysis, as described in Doug Hubbard’s The Failure of Risk Management, can be implemented using Excel and provides far more actionable information than qualitative scoring.
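To make the idea concrete, here is a minimal Monte Carlo sketch, written in Python instead of Excel. It assumes a single hazard, a hypothetical annual probability of occurrence, and a loss range treated as the 90% interval of a lognormal distribution. Every figure is invented for illustration, and the code is not presented as Hubbard's exact procedure.

```python
import math
import random


def simulate_annual_loss(p_event, loss_low, loss_high, trials=20_000, seed=1):
    """Simulate one year many times and return the loss from each simulated year.

    loss_low and loss_high are treated as the 5th and 95th percentiles
    of a lognormal loss distribution (an assumption of this sketch).
    """
    rng = random.Random(seed)
    mu = (math.log(loss_low) + math.log(loss_high)) / 2
    sigma = (math.log(loss_high) - math.log(loss_low)) / (2 * 1.645)
    losses = []
    for _ in range(trials):
        if rng.random() < p_event:  # the event occurs in this simulated year
            losses.append(rng.lognormvariate(mu, sigma))
        else:
            losses.append(0.0)
    return losses


def summarize(losses, threshold):
    """Return the mean annual loss and the share of years with loss above threshold."""
    mean = sum(losses) / len(losses)
    p_exceed = sum(1 for x in losses if x > threshold) / len(losses)
    return mean, p_exceed


# Hypothetical inputs: a control is assumed to cut the annual probability from 10% to 4%.
before = simulate_annual_loss(p_event=0.10, loss_low=200_000, loss_high=5_000_000)
after = simulate_annual_loss(p_event=0.04, loss_low=200_000, loss_high=5_000_000)

mean_before, exceed_before = summarize(before, threshold=1_000_000)
mean_after, exceed_after = summarize(after, threshold=1_000_000)

print(f"Mean annual loss: {mean_before:,.0f} before, {mean_after:,.0f} after")
print(f"Chance of losing more than 1,000,000 in a year: {exceed_before:.1%} before, {exceed_after:.1%} after")
```

The output is a mean annual loss and a chance of exceeding a stated loss, before and after the control. Those are figures that can be weighed against the cost of the control, which a move from "yellow" to "green" cannot offer.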

Other effective methodologies include:

- Decision Trees

- Failure Mode and Effects Analysis (FMEA)

- ThinkReliability’s Cause Mapping
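As an illustration of the first item, a decision tree in its simplest form compares the expected cost of each option. The sketch below assumes two hypothetical options and invented probabilities and losses; a real tree would normally have more branches and would draw its inputs from data.

```python
def expected_cost(upfront_cost, outcomes):
    """Upfront cost plus the probability-weighted loss across the branches.

    outcomes is a list of (probability, loss) pairs whose probabilities sum to 1.
    """
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("branch probabilities must sum to 1")
    return upfront_cost + sum(p * loss for p, loss in outcomes)


# Hypothetical figures: the control costs 100,000 and is assumed to cut
# the chance of a 2,000,000 loss from 10% to 4%.
options = {
    "accept risk": expected_cost(0, [(0.10, 2_000_000), (0.90, 0)]),
    "install control": expected_cost(100_000, [(0.04, 2_000_000), (0.96, 0)]),
}

best = min(options, key=options.get)
for name, cost in options.items():
    print(f"{name}: expected cost {cost:,.0f}")
print("Lowest expected cost:", best)
```

Expected cost is only one possible decision rule. An organization may still reject an option whose worst-case branch is unacceptable against its safety objectives, whatever the average looks like.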

Measure control effectiveness

Effective controls reduce risk—but only if they actually work. Control effectiveness cannot be assumed; it must be measured.
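A minimal sketch of one such measurement compares the event rate per unit of exposure before and after a control was introduced. The event counts, the flight-hour exposure figures, and the choice of a per-1,000-hours rate are hypothetical.

```python
def rate_per_1000_hours(events, exposure_hours):
    """Events per 1,000 hours of exposure."""
    if exposure_hours <= 0:
        raise ValueError("exposure_hours must be positive")
    return 1000 * events / exposure_hours


def relative_reduction(events_before, hours_before, events_after, hours_after):
    """Fraction by which the event rate fell after the control was introduced."""
    before = rate_per_1000_hours(events_before, hours_before)
    after = rate_per_1000_hours(events_after, hours_after)
    if before == 0:
        raise ValueError("no events before the control; reduction is undefined")
    return 1 - after / before


# Hypothetical data: 18 events in 12,000 flight hours before the control,
# 9 events in 15,000 flight hours after it.
reduction = relative_reduction(18, 12_000, 9, 15_000)
print(f"Observed reduction in event rate: {reduction:.0%}")
```

With counts this small, part of the observed change may be chance, and other factors may have shifted over the same period. A figure like this is a starting point for a safety performance indicator, not proof that the control works.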

The Safety Management International Collaboration Group (SM ICG) pamphlet Measuring Safety Performance – Guidelines for Service Providers provides practical guidance for developing safety performance indicators linked to controls. The Design, Implementation, Monitoring, and Evaluation (DIME) framework developed by the Applied Mental Health Research Group is another valuable tool for assessing whether controls are producing the intended safety outcomes.

Final Thought

If the goal of SMS is informed decision-making and continuous improvement, safety managers must move beyond reliance on simplistic risk matrices. Data-driven methods, structured analysis, and measurable controls provide far better insight into actual risk exposure.

Risk management should illuminate decisions—not conceal uncertainty behind a grid of colored squares.